In the fall of 2018, Eugene Santiago and Shao-Yu Chen conducted a small study to understand the strengths and weaknesses of PUBG Mobile’s post-game statistics screen. While informative, the study was largely qualitative, consisting of multiple in-depth user interviews. However, UX evaluations often go beyond qualitative interviews and user-submitted feedback. This article compiles and discusses a list, in no way exhaustive, of existing measures, standards, heuristics, and models, both objective and subjective, that may prove useful in evaluating video game interfaces and processes.

User Interface Evaluation

User interfaces, by nature, can be complex systems, with large numbers of menus, calls to action, and status indicators. Games add another layer of complexity by giving players goals. For example, NPCs (non-player characters) with whom a player must interact can be considered another layer of user interface: the player must choose which NPCs to interact with and which to avoid.

For the more “conventional” parts of a game UI, there are numerous heuristics, metrics, and principles that can help researchers identify and understand interface problems. The most famous example is perhaps Jakob Nielsen’s 10 Usability Heuristics, published by the Nielsen Norman Group. This set of heuristics is general enough to apply to games, and it can be used to evaluate use cases or specific elements in heuristic evaluations, as demonstrated by researchers on the Nielsen Norman Group website itself. However, the astute game UX researcher must also know which heuristics are applicable to their games. For example, the developers of Thomas Was Alone most likely would not need the Help and Documentation heuristic once the player begins the game, because of the familiar platformer controls and linear gameplay.

Thomas Was Alone, Bithell Games

In addition to the Nielsen heuristics, many other scales, metrics, and testing methods can help evaluate different aspects of user interfaces.

- The System Usability Scale (SUS) can help developers and researchers understand the overall usability of a game through 10 general questions answered by user-testing participants. However, the SUS should not be relied on by itself to identify specific problems within the interface. Qualitative probing, direct observation, or another metric can be used to supplement the SUS.

- First Click Testing can identify problems with the clarity of the interface and show how well participants can use the interface in more complex genres such as strategy games (e.g. StarCraft or Age of Empires). For role-playing games that require players to navigate a virtual world with an avatar, First Click Testing may even be adapted to understand the “First Path” users would take in the game world (e.g. where would players first go in Dark Souls or CrossCode?).

Where to go...? CrossCode, Radical Fish Games

- The NASA-TLX (Task Load Index) scale can provide insights into the effort players must expend to deal with the challenges of a game. Many games are fast-paced, with numerous events happening on the screen at the same time, e.g. when dealing with a large number of monsters in Path of Exile. Understanding the task load at various moments of the game, and the reasons for any overload, can guide adjustments to the user interface or game pacing that balance difficulty and usability.
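Two of the scales above reduce to simple arithmetic once responses are collected. The sketch below scores a single participant using the standard SUS formula (odd items contribute the answer minus 1, even items contribute 5 minus the answer, and the sum is multiplied by 2.5) and the unweighted "Raw TLX" variant of the NASA-TLX (the mean of the six subscale ratings). The function names and the dictionary keys are assumptions made for this illustration, and the weighted NASA-TLX procedure with pairwise comparisons is not covered.

```python
TLX_SUBSCALES = ("mental", "physical", "temporal",
                 "performance", "effort", "frustration")


def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` holds the ten answers in questionnaire order, each on the
    1 (strongly disagree) to 5 (strongly agree) scale.
    """
    if len(responses) != 10:
        raise ValueError("SUS needs exactly 10 responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item_number, answer in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += answer - 1   # odd items are positively worded
        else:
            total += 5 - answer   # even items are negatively worded
    return total * 2.5


def raw_tlx(ratings):
    """Return the unweighted mean of the six NASA-TLX subscale ratings.

    `ratings` maps each subscale name (hypothetical keys, see TLX_SUBSCALES)
    to a rating from 0 to 100.
    """
    missing = [name for name in TLX_SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscales: {missing}")
    return sum(ratings[name] for name in TLX_SUBSCALES) / len(TLX_SUBSCALES)
```

Scores from several participants would normally be averaged per game build or per gameplay moment. As noted above, a single number like this points to where to look, not to what the specific problem is.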

Evaluation of “Play”

In addition to metrics to evaluate UI, the fields of Human Factors, Human-Computer Interaction, Engineering Psychology, and Ergonomics have concepts and models that can assist in elements of game design beyond interactions with buttons and menus.

One concept in game design is the “gameplay loop,” the set of actions or game mechanics with which a player will engage repeatedly over the course of the game. Using Capcom’s Monster Hunter World as an example, a player’s explicit objective is to hunt monsters. In the process of hunting monsters, players receive materials to create stronger equipment. Using better equipment, players can proceed to hunt stronger monsters, which provide stronger materials. Thus, the gameplay loop of Monster Hunter World can be broken down into three basic steps:

Hunt → Gather → Craft

Monster Hunter World, Capcom

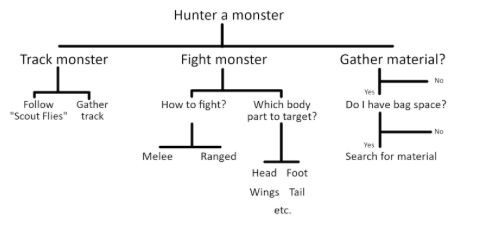

Monster Hunter World and many other games usually contain many more details than three simple steps, and understanding the relationships among all the different actions, decisions, and game mechanics can be difficult. One approach to breaking down the elements of a gameplay loop is Hierarchical Task Analysis (HTA). HTA lists the goals of a user in a system and all the steps needed to accomplish those goals. The resulting breakdown of tasks can help researchers understand where users are having problems, and help game designers optimize the gameplay loop.

Sample HTA diagram, using the Hunt step of the gameplay loop in Monster Hunter World
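An HTA can also be written down as data, which makes it easy to list, number, and annotate tasks during testing. The sketch below models a task tree and prints it with hierarchical numbering. The `Task` class, the `outline` function, and the specific subtasks under the Hunt step are assumptions invented for this illustration; they are not a transcription of the diagram.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Task:
    name: str
    subtasks: List["Task"] = field(default_factory=list)


def outline(task, number="0"):
    """Return the task tree as numbered lines (0, 1, 1.1, 1.2, 2, ...)."""
    lines = [f"{number} {task.name}"]
    for i, sub in enumerate(task.subtasks, start=1):
        # Children of the root are numbered 1, 2, 3; deeper levels add a dot.
        child_number = str(i) if number == "0" else f"{number}.{i}"
        lines.extend(outline(sub, child_number))
    return lines


# Hypothetical decomposition of the Hunt step, for illustration only.
hunt = Task("Hunt a monster", [
    Task("Prepare for the hunt", [
        Task("Accept a quest"),
        Task("Equip weapon and armor"),
    ]),
    Task("Track the monster"),
    Task("Fight the monster", [
        Task("Attack"),
        Task("Avoid damage"),
    ]),
    Task("Collect materials"),
])

for line in outline(hunt):
    print(line)
```

During user testing, observations such as errors or hesitation could be attached to individual task numbers to show where in the hierarchy players struggle.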

Another method of analyzing gameplay is the GOMS (Goals, Operators, Methods, and Selection Rules) model, introduced in 1983 by Stuart K. Card, Thomas P. Moran, and Allen Newell. Using GOMS, researchers can build a visual representation of the components of a gameplay loop, along with all the relevant actions to achieve a gameplay goal. In GOMS, a Goal is the desired situation or state to be achieved by the user; a Method is a sequence of Operators (physical, procedural, or cognitive actions) that achieves a Goal; and users apply Selection Rules to choose which Method to use when more than one can achieve the Goal.
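The four GOMS components map naturally onto a small data structure. The following is a minimal sketch, not a standard GOMS notation: the `Goal` and `Method` classes, the healing scenario, and the operator names are all hypothetical and chosen only to show how a Selection Rule picks one Method among several.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Method:
    name: str
    operators: List[str]  # ordered physical, procedural, or cognitive actions


@dataclass
class Goal:
    description: str
    methods: Dict[str, Method]
    selection_rule: Callable[[dict], str]  # maps the current context to a method name

    def choose(self, context):
        """Apply the selection rule and return the chosen Method."""
        return self.methods[self.selection_rule(context)]


def heal_rule(context):
    # Hypothetical rule: use a potion if one is available, otherwise retreat.
    return "drink_potion" if context["potions"] > 0 else "retreat_to_camp"


restore_health = Goal(
    description="Restore health during a hunt",
    methods={
        "drink_potion": Method("drink_potion", [
            "notice low health", "open item bar", "select potion", "confirm",
        ]),
        "retreat_to_camp": Method("retreat_to_camp", [
            "notice low health", "open map", "select camp", "confirm travel",
        ]),
    },
    selection_rule=heal_rule,
)

print(restore_health.choose({"potions": 2}).operators)
```

Comparing the rule a designer expects with the Method players actually choose in testing is one way to surface mismatches between intended and observed play.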

GOMS is also highly adaptable, and there are already many variants of the original model. For example, the Touch Level Model (TLM; Andrew D. Rice and Jonathan W. Lartigue, 2014) can be used to analyze touch interfaces, which can prove useful for mobile games. Notably, TLM uses a specific set of Operators to describe mobile interactions:

- Distraction (X)
- Gesture (G)
- Pinch (P)
- Zoom (Z)
- Initial Act (I)
- Tap (T)
- Swipe (S)
- Tilt (L(d))
- Rotate (O(d))
- Drag (D)
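Operator models of this kind are typically used by writing an interaction as a sequence of operators and summing a duration for each one. The sketch below does that for two hypothetical ways of opening an inventory screen. The durations are placeholders invented for this example, not measured values and not figures from the TLM paper; real values would have to come from benchmarking. Only operators that can be treated as fixed-duration steps are included: Tilt and Rotate take a parameter d, and Distraction is left out for simplicity.

```python
# PLACEHOLDER durations in seconds, invented for this sketch.
# They are NOT measured values and do not come from the TLM paper.
PLACEHOLDER_SECONDS = {
    "I": 1.0,   # Initial Act
    "T": 0.25,  # Tap
    "S": 0.5,   # Swipe
    "D": 0.75,  # Drag
    "P": 0.5,   # Pinch
    "Z": 0.5,   # Zoom
    "G": 1.0,   # Gesture
}


def estimate_seconds(operators, durations=PLACEHOLDER_SECONDS):
    """Sum the duration of each operator in the sequence."""
    unknown = [op for op in operators if op not in durations]
    if unknown:
        raise ValueError(f"no duration for operators: {unknown}")
    return sum(durations[op] for op in operators)


# Two hypothetical designs for reaching the inventory in a mobile game.
menu_route = ["I", "T", "T", "T"]  # initial act, then three taps through menus
swipe_route = ["I", "S"]           # initial act, then one swipe

print(estimate_seconds(menu_route), estimate_seconds(swipe_route))
```

With placeholder numbers the absolute totals mean little, but the same structure lets a team compare alternative designs once durations have been measured on their target devices.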

Theoretical models such as HTA and GOMS can prove useful as both a design tool and a vehicle for analysis. If used early enough, HTA and GOMS can help game designers model and visualize game mechanics and expected actions to anticipate potential problems. If used in conjunction with user testing, HTA and GOMS let researchers map the actual processes and approaches players use in-game and identify pain points experienced during gameplay.

While this article by no means provides an exhaustive list of concepts that can be applied to game UX research, it is hoped that it has demonstrated the value of different metrics and concepts for evaluating video game interfaces and processes. In addition to Human-Computer Interaction, the fields of Human Factors, Engineering Psychology, Ergonomics, and more can all be rich sources of theory for understanding and analyzing the interaction between players and games.
