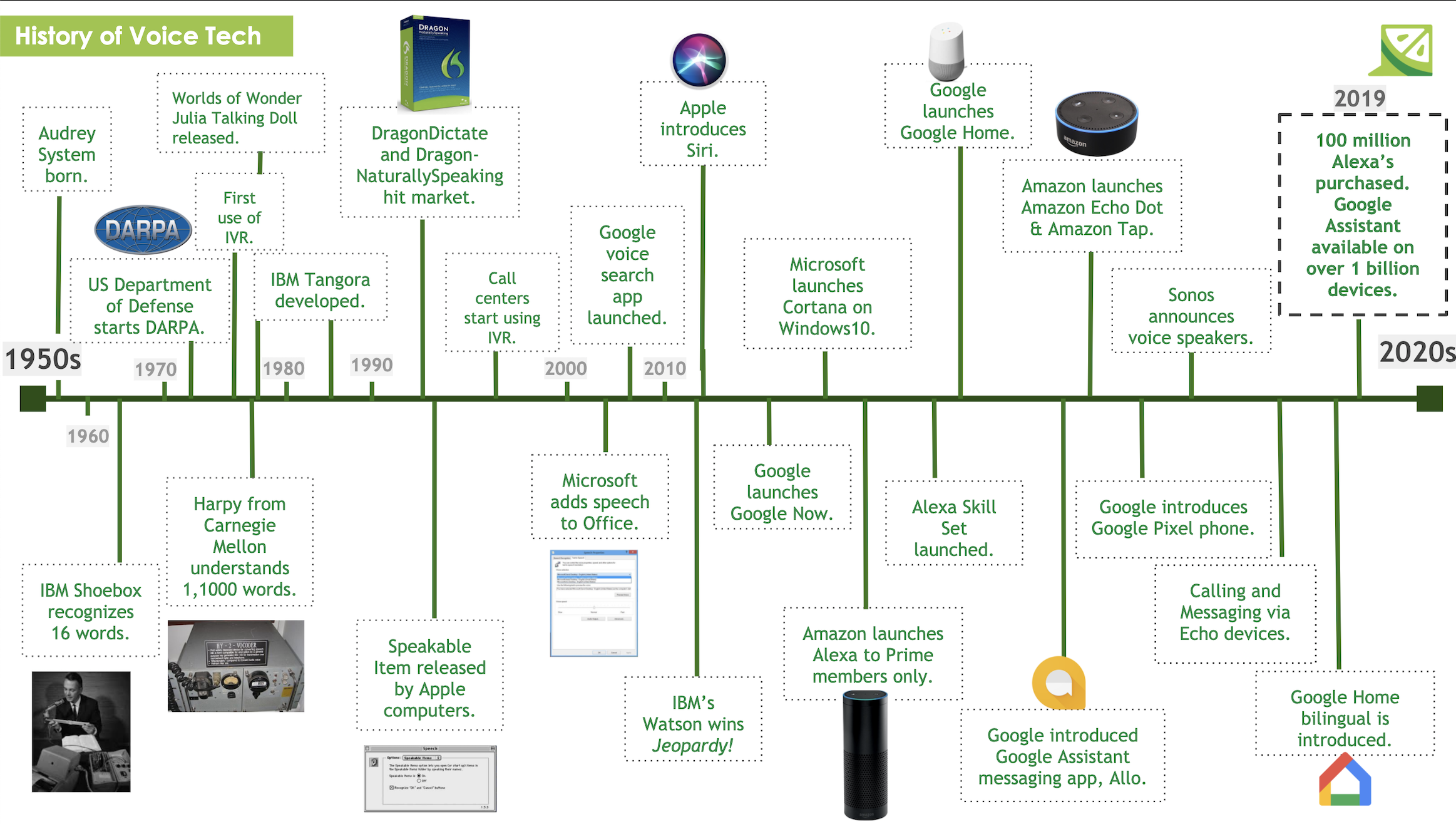

Many people think of voice technology as a recent innovation. However, the study, development, and implementation of voice and speech recognition technologies have been going on for the last 70 years. This article provides an overview of the history of voice technology and how it has developed since its creation. In 1952, Bell Laboratories designed the first speech recognition system, known as “Audrey,” which could recognize only single digits spoken aloud (source). “The machine understood digits 0-9 if speakers paused in between, though it would have to adapt to each user before it could capture their speech with reasonable accuracy” (source).

The Birth of Voice Technology

It was thought that Audrey could be used for hands-free telephone dialing. However, Audrey ultimately lacked mass-market appeal because of its large size, power requirements, and production and maintenance costs (source). Almost ten years later, IBM introduced its “Shoebox,” which could understand and respond to 16 spoken English words as well as the digits 0-9. “Like Audrey, the device attempted to recognize and act on the specific frequency of the vowels in each spoken digit” (source).

In the 1970s, the U.S. Department of Defense's Advanced Research Projects Agency (DARPA) began the Speech Understanding Research (SUR) program, which funded speech recognition research and development, including work at Carnegie Mellon University. DARPA's goal at the time was a speech recognition system that could understand a vocabulary of up to 1,000 words. As a result of the research conducted under SUR, Carnegie Mellon developed its “Harpy” speech system in 1976, which understood over 1,000 spoken English words. “Harpy processed speech that followed pre-programmed vocabulary, pronunciation, and grammar structures. Like the voice assistants available in 2018, Harpy returned an ‘I don’t know what you said, please repeat’ message when it couldn’t understand the speaker” (source). Even so, Harpy remained limited in its ability to understand natural language. Also in the late 1970s, the first commercial application of interactive voice response (IVR), designed and developed by Steven Shmidt, launched. IVR systems are computer-automated telephone systems that use specialized telephone hardware and manipulate a digitized voice to interact with callers.

During the 1980s, the Hidden Markov Model (HMM) advanced voice technology research by using statistics to “determine the probability of a word originating from an unknown sound” (source). This statistical method was a breakthrough because “instead of just using words and looking for sound patterns, the HMM estimated the probability of the unknown sounds being words” (source).

The 1990s brought many technological advances, including broader consumer access to both personal computers and speech recognition technologies. DragonDictate, developed by Dr. James Baker, was the first consumer speech recognition product. It used discrete dictation, which required the user to pause between each spoken word. Later, in 1997, Dragon NaturallySpeaking, the first continuous speech recognition product for consumers, entered the market. Dragon NaturallySpeaking could recognize and transcribe natural human speech at about 100 words per minute, and unlike DragonDictate, it did not require users to pause between words. “By pioneering continuous speech recognition, Dragon made it practical for the first time to use speech recognition for document creation” (source). Dragon NaturallySpeaking is still available for download and is used by professionals such as medical practitioners. Also in the 1990s, call centers began investing in computer telephony integration (CTI) with IVR systems, giving birth to the automated phone call. Apple's Speakable Items, the first built-in speech recognition and voice-control software for Apple computers, shipped as part of the Mac. In the early 2000s, Microsoft released a similar feature in its software.

Recent Voice Technology Developments

Since the 2010s, the research, development, and implementation of voice technology have skyrocketed. The decade began with IBM’s Watson, a question-answering computer system capable of understanding natural language, beating Jeopardy! champion Ken Jennings on TV. Later that year, Apple introduced Siri with the iPhone 4S. After Siri’s launch, natural language speech recognition technology spread rapidly across companies and devices. In 2013, Microsoft introduced Cortana, a virtual assistant similar to Siri, which was to be implemented across all Windows devices. Soon after, Amazon introduced its Alexa-powered Echo device, initially available only to Prime members. In 2015, the Echo became widely available in the United States, and Google Home launched the following year. Today, voice-activated virtual assistants are commonplace in both our homes and our cars. In 2020, the U.S. smart speaker user base grew 32% over the previous year to 87.7 million adults, while in-car voice assistant users in the U.S. totaled almost 130 million, 83.8 million of whom were active monthly (source).

We at Key Lime Interactive are eager to see the ways in which voice technology continues to develop, so stay tuned for more voice technology content!

Looking to improve your voice experience? Email us at info@keylimeinteractive.com.